Load data files

The data comes from the UCI Machine Learning Repository (ics.uci.edu/ml), specifically the Opinion Reviews data set.

data.battery <- read.table("data/battery-life_amazon_kindle.txt.data", sep = "\n", stringsAsFactors = FALSE, strip.white = TRUE)
data.windows7 <- read.table("data/features_windows7.txt.data", sep = "\n", stringsAsFactors = FALSE, strip.white = TRUE)

## Warning in scan(file, what, nmax, sep, dec, quote, skip, nlines,
## na.strings, : EOF within quoted string
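The "EOF within quoted string" warning usually means that `read.table` treated an apostrophe or double quote in a review as the start of a quoted field and never found the closing one. This is probably also why some entries further down contain an embedded `\n`. A minimal sketch of one way around it, using a small made-up sample file (the three sentences and the temporary file are assumptions for illustration, not part of the data set), is to switch off quote handling with `quote = ""` or to read the file with `readLines()`:

```r
# Hypothetical sample: one sentence per line, with an apostrophe and a stray double quote.
tmp <- tempfile(fileext = ".txt")
writeLines(c("The battery doesn't last long .",
             "It is 10\" wide and easy to carry .",
             "Charging over USB works well ."), tmp)

# quote = "" disables quote handling, so each line is read as its own row.
reviews <- read.table(tmp, sep = "\n", quote = "",
                      stringsAsFactors = FALSE, strip.white = TRUE)
nrow(reviews)

# readLines() is a simpler alternative for one-sentence-per-line files.
lines <- trimws(readLines(tmp))
length(lines)
```

Either variant should return one entry per line of the file, which makes the later per-sentence processing easier to reason about.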

data.keyboard <- read.table("data/keyboard_netbook_1005ha.txt.data", sep = "\n", stringsAsFactors = FALSE, strip.white = TRUE)
data.honda <- read.table("data/performance_honda_accord_2008.txt.data", sep = "\n", stringsAsFactors = FALSE, strip.white = TRUE)
data.ipod <- read.table("data/video_ipod_nano_8gb.txt.data", sep = "\n", stringsAsFactors = FALSE, strip.white = TRUE)

library(gdata)
# Combine the first column of every data set into one character vector,
# trimming leading and trailing whitespace from each sentence.
sentences <- trim(c(data.battery[, 1], data.windows7[, 1], data.keyboard[, 1], data.honda[, 1], data.ipod[, 1]))

sentences[1:10]

## [1] "After I plugged it in to my USB hub on my computer to charge the battery the charging cord design is very clever !"
## [2] "After you have paged tru a 500, page book one, page, at, a, time to get from Chapter 2 to Chapter 15, see how excited you are about a low battery and all the time it took to get there !"
## [3] "NO USER REPLACEABLE BATTERY, , Unless you buy the extended warranty for $65 ."
## [4] "After 1 year you pay $80 plus shipping to send the device to Amazon and have the Kindle REPLACED, not the battery changed out ."
## [5] "The fact that Kindle 2 has no SD card capability and the battery is not user, serviceable is not an issue with me ."
## [6] "Things like the buttons that made it easy to accidentally turn pages the separate cursor on the side that could only select lines and was sometimes hard to see the occasionally awkward menus the case which practically forced you to remove it to use it and sometimes pulled the battery door off ."
## [7] "The issue with the battery door opening is thus solved, but Amazon went further, eliminating the door altogether and wrapping the back with sleek stainless steel ."
## [8] "Frankly, I never used either the card slot or changed the battery on my Kindle 1 but I liked that they were there and I miss them on the Kindle 2, even though, I have to admit, I dont actually need them .\n Its also easy to charge the Kindle in the car if you have a battery charger with a USB port ."
## [9] "You cant carry an extra battery , though with the extended battery life and extra charging options its almost a non, issue , and you cant replace the battery because of the iPod, like fixed backing .\n For one thing, theres no charge except battery power no pun intended !"
## [10] "Before purchasing, I was obsessed with the reviews and predictions I found online and reading about some of the critiques such as the thick border, the lack of touchscreen, lack of battery SD slot, lack of a back light, awkward difficult keyboard layout, minimally faster page flipping, and the super, high price ."

library(Matrix)
library(gamlr)
library(parallel)
library(distrom)
library(textir)
library(NLP)
library(tm)
library(SnowballC)

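# Build a corpus with one document per sentence.
corpus <- VCorpus(VectorSource(sentences))
# Normalize the encoding; 'UTF-8-MAC' is a macOS-specific encoding name.
corpus <- tm_map(corpus, content_transformer(function(x) iconv(x, to='UTF-8-MAC', sub='byte')), mc.cores=1)
corpus <- tm_map(corpus, content_transformer(removePunctuation), lazy = TRUE)
# Note: my.stopwords is defined here, but the removeWords call below passes its own list (without "use").
my.stopwords <- c(stopwords('english'), "the", "great", "use")
corpus <- tm_map(corpus, removeWords, c(stopwords('english'), "the", "great"))
corpus <- tm_map(corpus, removeNumbers)
corpus <- Corpus(VectorSource(corpus))
# Build the document-term matrix (rows are documents, columns are terms).
dtm <- DocumentTermMatrix(corpus, control=list(minWordLength=4, minDocFreq=4))
dtm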

## <<DocumentTermMatrix (documents: 245, terms: 1492)>>
## Non-/sparse entries: 4171/361369
## Sparsity : 99%
## Maximal term length: 14
## Weighting : term frequency (tf)

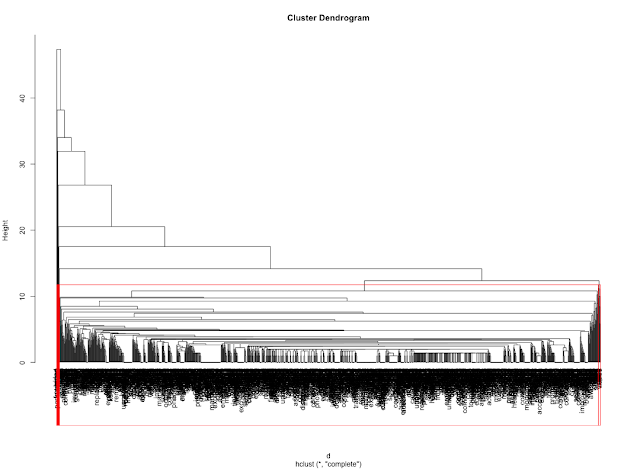

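# Convert the document-term matrix to a dense matrix and transpose it so that rows are terms.
# as.matrix() is used instead of inspect(), which is meant for printing.
m <- t(as.matrix(dtm))
# Pairwise Euclidean distances between terms.
d <- dist(m)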
